The PacMan capture-the-flag contest

You are going to compete on a variant of the classic PacMan game. On the last day, we will have a PacMan Tournament where your agents can prove their ability.

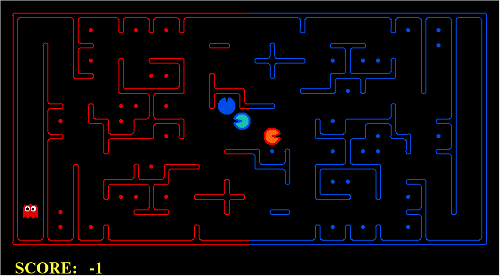

There are two teams: “Blue” and “Red”, each consisting of two agents on screen. The maze is split into two halves. When agents are in their own half of the maze, they are ghosts and can “eat” opponent PacMans. When they move into the opponent's half, they become PacMans and can eat food. When an agent gets eaten, it is “reborn” as a ghost at the farthest corner of its own half.

Score and Game Over

At the beginning the score is 0.

Each food item eaten by the “Red” team adds 1 to the overall score, and each food item eaten by the “Blue” team subtracts 1 from it. If an agent takes too long to make a move (default 0.5 seconds, can be changed with the --maxMoveTime command-line switch), its team gets a penalty (default 0.0 points, i.e. no penalty; it can be changed with the --timePenalty command-line switch): timePenalty is added to the overall score if a “Blue” agent is too slow, and subtracted from it if a “Red” agent is too slow.

The game is over either when one team eats all available food, or when a predefined timeout has elapsed (default 3000 moves, can be changed with the --time command-line switch).

A positive final score means that the “Red” team wins; a negative one means that the “Blue” team wins.
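The scoring rules above can be sketched in a few lines of Python. This is only an illustration, not the code used by capture.py: the function names and arguments are made up for this example, and the “Tie” result for a score of exactly 0 is an assumption, since the rules above do not say what happens in that case.

```python
def final_score(red_food_eaten, blue_food_eaten,
                red_timeouts=0, blue_timeouts=0, time_penalty=0.0):
    """Overall score: positive favours Red, negative favours Blue."""
    # Red food adds 1 per item, Blue food subtracts 1 per item.
    score = red_food_eaten - blue_food_eaten
    # A slow Blue agent adds the penalty, a slow Red agent subtracts it.
    score += time_penalty * blue_timeouts
    score -= time_penalty * red_timeouts
    return score


def winner(score):
    """Name the winning team for a final score."""
    if score > 0:
        return "Red"
    if score < 0:
        return "Blue"
    return "Tie"  # assumption: the rules do not cover a score of 0


# Both teams ate 5 items, but Red was too slow twice with --timePenalty 1.
print(winner(final_score(5, 5, red_timeouts=2, time_penalty=1.0)))
```

With the default penalty of 0.0 the timeouts have no effect, so only the food counts matter.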

Your Task

You will need to write an algorithm that controls the agents. You will have to design and implement defense and attack strategies, and write planning algorithms to navigate through the maze and dodge/tackle the opponent agents. To test your agents you will be able to let them play against the default ones or our own ones! At the end of Day4 we plan to have a tournament: all agents will play against each other and the winning team is going to get a prize (in addition to glory and fame)! Be aware that the quality of your code is more important than fancy strategies: is it well tested? Does it conform to standards? The team with the best pylint score is also going to get a prize!

Start the Game

You can start a demo game with:

python capture.py

Try

python capture.py --help

for a list of command-line options.

Acknowledgments

The game is courtesy of John DeNero at the University of California, Berkeley, where he uses it for the Artificial Intelligence course. The original page contains a general description of how to run matches and write your own agents. Be aware that we may change some of the rules during the course.

Writing agents 101

AgentFactory

You can let your agents take part in the game by setting your AgentFactory as the factory for the “Red” team like this:

python capture.py --red MyAgentFactory

You can use the --blue option to set the factory for the “Blue” team.

The directory containing your AgentFactory must be found in your PYTHONPATH, and a class named MyAgentFactory must be defined in a file ending in “*gents.py” within your PYTHONPATH.

You can set your PYTHONPATH temporarily with

export PYTHONPATH="/home/student/summerchool/FreakAgents"

or permanently by adding the previous line to the /home/student/.bashrc file and restarting the terminal.

An AgentFactory is a simple object with a method, getAgent, that takes an agent index and returns a new game.Agent instance each time it is called. For example:

    class OffenseDefenseAgents(AgentFactory):
        """Returns one offensive reflex agent and one defensive reflex agent"""

        def __init__(self, **args):
            AgentFactory.__init__(self, **args)
            self.offense = False

        def getAgent(self, index):
            # Alternate between offense and defense on each call.
            self.offense = not self.offense
            if self.offense:
                return captureAgents.OffensiveReflexAgent(index)
            else:
                return captureAgents.DefensiveReflexAgent(index)

Agent

Writing an Agent is extremely simple: you need to write a subclass of game.Agent. There are two methods: agent.registerInitialState(game_state), which initializes the agent given the initial game state (useful e.g. to analyze the position of food), and the most important one, agent.getAction(game_state), which is called at every round and has to return one of game.Directions.{NORTH, SOUTH, EAST, WEST, STOP}.

In the getAction function, an agent typically examines the game state (an instance of capture.GameState) and decides what to do next. See below for a description of the methods in GameState.

This is the definition of the base class:

    class Agent:
        """
        An agent must define a getAction method, but may also define the
        following methods which will be called if they exist:

        def registerInitialState(self, state): # inspects the starting state
        """

        def __init__(self, index=0):
            self.index = index

        def getAction(self, state):
            """
            The Agent will receive a GameState (from either {pacman, capture,
            sonar}.py) and must return an action from
            Directions.{North, South, East, West, Stop}
            """
            raiseNotDefined()

This is an example agent that makes moves at random:

    import random

    class BrownianAgent(BasicAgent):
        def getAction(self, game_state):
            # Pick uniformly among the moves that are legal for this agent.
            actions = game_state.getLegalActions(self.index)
            return random.choice(actions)
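A small step up from random moves is to pick the legal action that brings the agent closest to some target square. The sketch below is deliberately independent of the game modules so that it runs on its own: the MOVES table is a stand-in for game.Directions, and both its string values and its unit vectors are assumptions you should check against the real module. The name greedy_action is made up for this example.

```python
import random

# Stand-in for game.Directions plus the move each action performs.
# These strings and vectors are assumptions; check them against game.py.
MOVES = {
    "North": (0, 1),
    "South": (0, -1),
    "East": (1, 0),
    "West": (-1, 0),
    "Stop": (0, 0),
}


def greedy_action(legal_actions, position, target, rng=random):
    """Return a legal action that minimizes the Manhattan distance to target."""
    def distance_after(action):
        dx, dy = MOVES[action]
        return (abs(position[0] + dx - target[0])
                + abs(position[1] + dy - target[1]))

    best = min(distance_after(action) for action in legal_actions)
    # Break ties at random so the agent does not always favour one direction.
    return rng.choice([action for action in legal_actions
                       if distance_after(action) == best])


# The agent stands at (1, 1) and wants to reach (5, 1).
print(greedy_action(["North", "East", "Stop"], (1, 1), (5, 1)))
```

Inside a real agent you would pass in game_state.getLegalActions(self.index) and your own position. Note that Manhattan distance ignores walls, so this policy can get stuck in dead ends.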

BasicAgent

We provide a more advanced base class, basic_agent.BasicAgent, which is better suited as a base class for your agents, as it defines some helper methods. To implement your own agents, there are two main methods that you should override: register_initial_state, which allows you to initialize your agent before the game begins (e.g., if you want to analyze the maze), and choose_action, which returns the action your agent should perform at each turn and replaces Agent.getAction. Make sure you call the parent method of register_initial_state, as it performs some useful operations.

This is a list of the methods defined in BasicAgent:

    """
    Interesting internal variables:
    index -- index for this agent
    is_red -- True if you're on the red team, False if you're blue
    """

    def register_initial_state(self, game_state):
        """This method handles the initial setup of the agent.

        You should override it if you have any business to do before the
        game begins (e.g., analyzing the maze). Make sure to invoke the
        super method when you override this.
        """

    def choose_action(self, game_state):
        """Return the next action the agent needs to perform.

        This is the method you need to override if you want your agent to
        do something sensible.
        """

    def say(self, txt):
        """Display a message on screen."""

    def get_food(self, game_state, enemy_food=True):
        """Return the food on the maze belonging to one of the teams.

        Return a game.Grid instance 'food', where food[x][y] == True if
        there is food you should eat or protect (based on the value of
        enemy_food) in that square.

        Keyword arguments:
        enemy_food -- If True, returns the food you're supposed to eat,
                      otherwise return the food you should protect.
                      (default: True)
        """

    def get_team_indices(self, game_state, enemy_indices=True):
        """Return a list of the indices of the agents of one of the teams.

        Keyword arguments:
        enemy_indices -- If True, returns the indices of the opposing team,
                         otherwise return the indices of your own team.
                         (default: True)
        """

    def get_team_positions(self, game_state, enemy_pos=True):
        """Return a list with the position of the members of a team.

        If an opponent is too far (> 5 squares Manhattan distance), the
        corresponding entry will be None, as its position is unavailable.

        Keyword arguments:
        enemy_pos -- If True, returns the positions of the opposing team,
                     otherwise return the position of your own team.
                     (default: True)
        """

    def get_team_distances(self, game_state, enemy_dist=True):
        """Return a list with the noisy distances from the members of a team.

        Distances will be returned as the real distance, +/- 6.

        Keyword arguments:
        enemy_dist -- If True, returns the distance of the opposing team,
                      otherwise return the distance of your own team.
                      (default: True)
        """

    def get_score(self, game_state):
        """Return your score.

        Your score is given by a number that is the difference between
        your score and the opponent's score. This number is negative if
        you're losing.
        """

GameState

This object represents the state of the game at some point. You can access game-related data through the following methods (I removed methods that are not useful, and expanded the docstrings if necessary):

    def getLegalActions(self, agentIndex=0):
        """
        Returns the legal actions for the agent specified.
        The function returns a list of strings, as defined in
        game.Directions
        """

    def generateSuccessor(self, agentIndex, action):
        """
        Returns the successor state (a GameState object) after the
        specified agent takes the action.
        With this function you can try out different actions and see in
        which state you are going to land, useful for implementing
        Reinforcement Learning agents.
        """

    def getAgentPosition(self, index):
        """
        Returns a location tuple if the agent with the given index is
        observable; if the agent is unobservable, returns None.
        """

    def getRedFood(self):
        """
        Returns a matrix of food that corresponds to the food on the red
        team's side. For the matrix m, m[x][y]=true if there is food in
        (x,y) that belongs to red (meaning red is protecting it, blue is
        trying to eat it).
        """

    def getBlueFood(self):
        """
        Returns a matrix of food that corresponds to the food on the blue
        team's side. For the matrix m, m[x][y]=true if there is food in
        (x,y) that belongs to blue (meaning blue is protecting it, red is
        trying to eat it).
        """

    def getWalls(self):
        """
        Returns a boolean 2D matrix the size of the game board.
        getWalls()[x][y] == True if there is a wall at (x,y), False
        otherwise.
        You might want to call this function in the registerInitialState
        phase of your agent if you want to analyze the maze.
        """

    def hasFood(self, x, y):
        """
        Returns true if the location (x,y) has food, regardless of
        whether it's blue team food or red team food.
        """

    def hasWall(self, x, y):
        """Returns true if (x,y) has a wall, false otherwise."""

    def getRedTeamIndices(self):
        """
        Returns a list of agent index numbers for the agents on the red
        team.
        """

    def getBlueTeamIndices(self):
        """
        Returns a list of the agent index numbers for the agents on the
        blue team.
        """

    def isOnRedTeam(self, agentIndex):
        """
        Returns true if the agent with the given agentIndex is on the red
        team.
        """

    def getAgentDistances(self):
        """
        Returns a noisy distance to each agent.
        """

    def getInitialAgentPosition(self, agentIndex):
        """Returns the initial position of an agent."""

getWalls, getRedFood, and getBlueFood return a game.Grid object. It works more or less like a 2D list, but if you want to access the data directly you can call Grid.asList().
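Since the wall and food grids are indexed as grid[x][y], a breadth-first search over them gives the true walking distance to the closest food, which can differ a lot from the Manhattan distance. The following is a minimal sketch under some assumptions: it uses plain nested lists as a stand-in for game.Grid (a real Grid may expose its width and height differently from len()), and the helper grid_from_rows and the tiny MAZE exist only for this example.

```python
from collections import deque


def nearest_food_distance(walls, food, start):
    """Breadth-first search from start to the closest food square.

    walls and food are indexed as grid[x][y]. Returns the number of
    moves needed, or None if no food can be reached.
    """
    width, height = len(walls), len(walls[0])
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (x, y), dist = queue.popleft()
        if food[x][y]:
            return dist
        for dx, dy in ((0, 1), (0, -1), (1, 0), (-1, 0)):
            nx, ny = x + dx, y + dy
            if (0 <= nx < width and 0 <= ny < height
                    and not walls[nx][ny] and (nx, ny) not in seen):
                seen.add((nx, ny))
                queue.append(((nx, ny), dist + 1))
    return None


def grid_from_rows(rows, char):
    """Build grid[x][y] from text rows; the last row is y == 0."""
    height, width = len(rows), len(rows[0])
    return [[rows[height - 1 - y][x] == char for y in range(height)]
            for x in range(width)]


# '%' is a wall and '.' is food (a made-up layout for this example).
MAZE = ["%%%%%",
        "% %.%",
        "%   %",
        "%%%%%"]
walls = grid_from_rows(MAZE, "%")
food = grid_from_rows(MAZE, ".")

# The food is 2 squares away in Manhattan terms, but a wall is in between.
print(nearest_food_distance(walls, food, (1, 2)))
```

In an agent, the wall grid does not change during a game, so it is worth computing distances once in the initialization method rather than at every turn.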

Keeping track of your opponent

- The game does not let you know where an opponent is unless somebody on your team is within 5 squares of it (Manhattan distance). You can always get the position of your allies using game_state.getAgentPosition(ally_index). See the function BasicAgent.get_team_positions.

- You can always obtain your distance from any other agent, but it will be contaminated by uniform random noise of +/- 6. See the function BasicAgent.get_team_distances.
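One way to make use of the noisy distances is to keep a set of squares where an opponent could be, and discard the squares that do not fit each reading. The sketch below assumes the noisy reading is based on Manhattan distance and that the noise never exceeds 6; the function names are made up for this example. Combining the readings of both teammates in the same turn narrows the set further.

```python
NOISE = 6  # readings are the real distance +/- 6, as described above


def manhattan(a, b):
    """Manhattan distance between two (x, y) positions."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def consistent_positions(candidates, my_pos, noisy_distance, noise=NOISE):
    """Keep the candidate squares that could have produced the reading."""
    return {pos for pos in candidates
            if abs(manhattan(my_pos, pos) - noisy_distance) <= noise}


# A 30-square corridor: every square is a candidate at first.
candidates = {(x, 0) for x in range(30)}

# I stand at (0, 0) and read 20; my teammate at (29, 0) reads 10.
candidates = consistent_positions(candidates, (0, 0), 20)
candidates = consistent_positions(candidates, (29, 0), 10)
print(sorted(candidates))
```

Opponents move between turns, so before applying the next turn's reading the candidate set would have to be expanded by one step in every direction.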

More useful stuff

- The possible moves as defined in game.Directions are: NORTH, WEST, SOUTH, EAST and STOP. STOP is always possible.

- The class game.Directions defines three dictionaries, LEFT, RIGHT, and REVERSE, that map absolute directions to relative ones (e.g., REVERSE[NORTH] == SOUTH).

- The best way I found to decide whether you've been eaten by a ghost is to compare your current position (game_state.getAgentPosition(self.index)) with your initial position (game_state.getInitialAgentPosition(self.index)), since it's usually in one of the corners, and you're unlikely to go back to it voluntarily.

- Testing agents is really useful: the alternative is to run the game until your agent ends up in the right game situation, then guess by looking at the screen whether the behavior is correct. Have a look at the testing agents page!
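The “have I been eaten?” check from the list above can be written as a small pure function, which also makes it easy to test without running a game. This sketch adds one assumption on top of the heuristic: an agent moves at most one square per turn, so landing on the initial position after a longer jump means it was reborn. The name was_eaten is made up for this example.

```python
def was_eaten(previous_pos, current_pos, initial_pos):
    """Guess whether the agent was eaten during the last round.

    The agent counts as eaten if it is back on its initial position
    and got there by a jump of more than one square (assumption: a
    normal move covers at most one square).
    """
    back_at_start = current_pos == initial_pos
    jump = (abs(current_pos[0] - previous_pos[0])
            + abs(current_pos[1] - previous_pos[1]))
    return back_at_start and jump > 1


# The agent was at (10, 5) and suddenly finds itself at its start (1, 1).
print(was_eaten((10, 5), (1, 1), (1, 1)))
```

In an agent you would remember the position from the previous turn and compare it with game_state.getAgentPosition(self.index) and game_state.getInitialAgentPosition(self.index) at the start of each turn.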